Behind the Glitch: A Fourier Breakdown of AI Music Artifacts

Generative AI has transformed how we create music, but it is not without its challenges. One of the key issues in AI-generated music is the presence of artifacts: unwanted glitches that degrade the quality of the audio. These artifacts may include distortion, rhythmic glitches, or frequency imbalances that sound unnatural.

To understand why these glitches happen, we turn to Fourier analysis, a signal-processing tool that breaks a complex audio signal down into its component frequencies. This post explains how Fourier analysis can help us identify and fix AI music artifacts, so that AI-generated music sounds cleaner and more professional.

What Are AI Music Artifacts?

Artifacts in AI music are unintended imperfections caused by the AI’s limitations. These glitches can manifest as:

- Audio distortion: Harsh, unpleasant sounds that interrupt the flow.

- Noise artifacts: Background static or buzz.

- Rhythmic issues: Unnatural timing or pacing between instruments.

- Frequency imbalances: Unnatural tone qualities due to improper frequency synthesis.

These are typically caused by imperfect training data or algorithmic limitations of the AI model.

Fourier Analysis: Breaking Down Sound

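The Fourier transform is a mathematical tool that decomposes a signal into its individual frequency components. For digital audio it is computed with the fast Fourier transform (FFT), often over short windows so that we can see when an artifact occurs as well as at which frequency. By analyzing the audio in the frequency domain, we can pinpoint where the artifacts occur and address them.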

- Step 1: Convert the audio signal into its frequency components using Fourier analysis.

- Step 2: Identify problematic frequencies where the artifacts are most noticeable.

- Step 3: Apply filters or smoothing techniques to remove or reduce the unwanted frequencies.
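The three steps above can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not a production denoiser. The test signal (a 440 Hz tone standing in for the music and a 3000 Hz tone standing in for the artifact), the helper names `dominant_frequencies` and `remove_band`, and the 50 Hz band width are all assumptions made for the example.

```python
import numpy as np

def dominant_frequencies(signal, sample_rate, count=2):
    """Steps 1 and 2: move to the frequency domain and return the strongest frequencies in Hz."""
    magnitudes = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    strongest = np.argsort(magnitudes)[::-1][:count]  # indices of the largest bins
    return freqs[strongest]

def remove_band(signal, sample_rate, center_hz, width_hz=50.0):
    """Step 3: zero every FFT bin within width_hz of center_hz, then transform back."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    spectrum[np.abs(freqs - center_hz) <= width_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

# Hypothetical test signal: one second of "music" plus an unwanted tone.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
music = np.sin(2 * np.pi * 440 * t)
artifact = 0.5 * np.sin(2 * np.pi * 3000 * t)
glitchy = music + artifact

peaks = dominant_frequencies(glitchy, sample_rate)
cleaned = remove_band(glitchy, sample_rate, center_hz=3000)

print("strongest frequencies (Hz):", peaks)
print("max deviation from clean signal:", np.max(np.abs(cleaned - music)))
```

In this toy case the artifact sits in a narrow band far from the music, so zeroing that band recovers the original almost exactly. On real recordings, abruptly zeroing bins can introduce ringing, so a filter with a gentler roll-off is usually a safer choice.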

How AI Models Cause Artifacts

AI music tools like Amper Music or AIVA generate music using models that have learned patterns from large datasets of existing music. However, these models may produce glitches due to:

- Limited data or bias in training sets.

- Algorithms that handle complex transitions or certain frequency ranges inconsistently.

- Overfitting, where a model memorizes its training examples and fails to generalize to new musical material.

Fixing AI Music with Fourier Techniques

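To address AI music artifacts, we can apply Fourier-based filtering techniques that remove unwanted frequencies. Filtering works best on artifacts that occupy a distinct part of the spectrum, such as hum, hiss, or a stray tone. Timing problems usually have to be fixed in the model or the arrangement instead. Real-time audio processing and better AI model training can also help minimize glitches and improve music quality.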
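One common way to apply Fourier filtering to longer audio is to work frame by frame, which is also how a real-time processor would see the signal. The sketch below is a simple spectral gate under stated assumptions: the function name `spectral_gate`, the 512-sample frame, the 5% threshold relative to each frame's peak, and the synthetic tone-plus-hiss test signal are all illustrative choices, not a method taken from any particular tool.

```python
import numpy as np

def spectral_gate(signal, frame_size=512, threshold_ratio=0.05):
    """Suppress weak frequency bins frame by frame using 50%-overlap-add."""
    hop = frame_size // 2
    # Periodic Hann window: copies shifted by half a frame sum to exactly 1,
    # so unmodified frames add back up to the original signal.
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(frame_size) / frame_size)
    # Pad so every original sample is covered by two overlapping frames.
    padded = np.concatenate([np.zeros(hop), signal, np.zeros(frame_size)])
    output = np.zeros_like(padded)
    for start in range(0, len(padded) - frame_size + 1, hop):
        frame = padded[start:start + frame_size] * window
        spectrum = np.fft.rfft(frame)
        magnitude = np.abs(spectrum)
        # Zero bins that are weak relative to this frame's strongest bin.
        spectrum[magnitude < threshold_ratio * magnitude.max()] = 0.0
        output[start:start + frame_size] += np.fft.irfft(spectrum, n=frame_size)
    return output[hop:hop + len(signal)]

# Hypothetical test signal: a 440 Hz tone with broadband hiss added.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
clean = np.sin(2 * np.pi * 440 * t)
rng = np.random.default_rng(0)
noisy = clean + 0.1 * rng.standard_normal(len(clean))

gated = spectral_gate(noisy)

def rms(x):
    return np.sqrt(np.mean(x ** 2))

print("RMS error before gating:", rms(noisy - clean))
print("RMS error after gating: ", rms(gated - clean))
```

A per-frame relative threshold is the simplest possible gate, and it will also remove quiet musical detail along with the noise. Practical noise reduction typically estimates a noise profile first and attenuates bins gradually rather than zeroing them outright.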

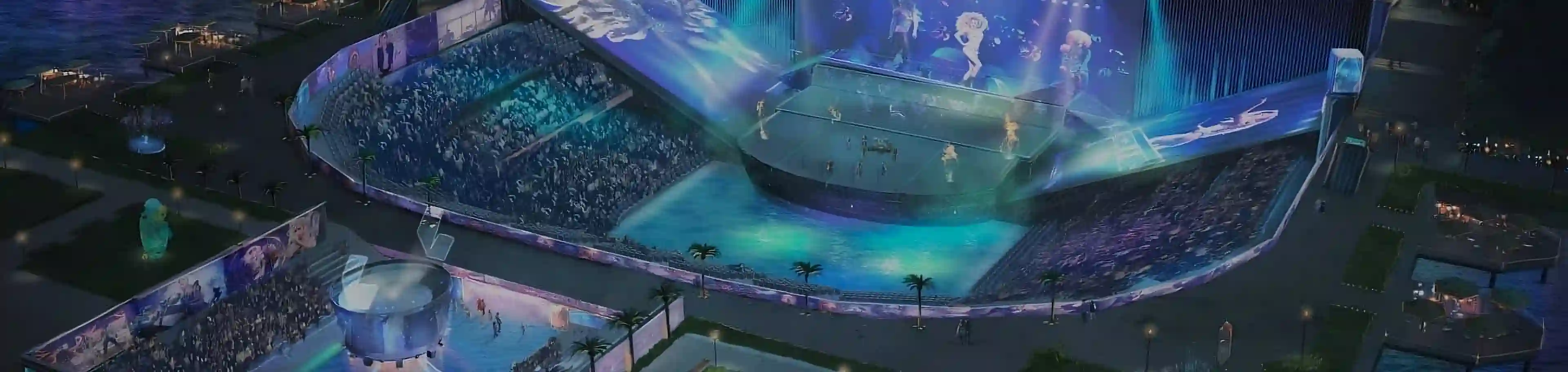

CEEK’s Role in AI Music

CEEK helps creators navigate these AI challenges by offering tools to monetize and share their AI-generated music. Through blockchain technology and CEEK Tokens, creators maintain ownership and earn revenue from their work, all while ensuring high-quality production with AI-powered enhancements.

Conclusion

AI music creation is revolutionizing the industry, but artifacts remain a challenge. By using Fourier analysis to identify and correct glitches, creators can bring AI-generated music closer to studio quality. With platforms like CEEK, creators have the tools to refine and monetize their work, pointing toward a future where AI-driven music is both creative and professional.